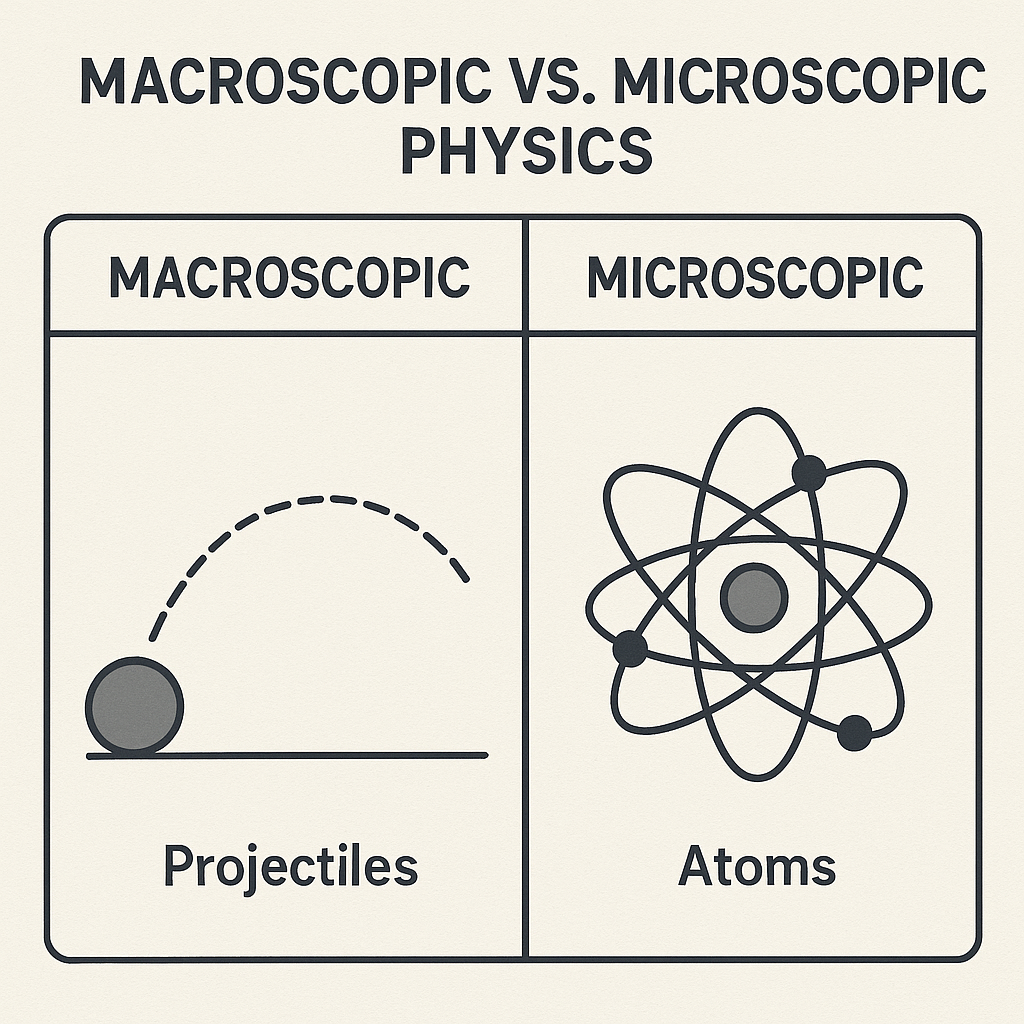

Look at the physical world around us and you’ll find a fascinating divide between how large objects and tiny particles behave. Recognizing this split revolutionized physics, and it still shapes modern technology and research.

In daily life, we rely on macroscopic objects such as cars, planes, ships, and trains. Classical physics, refined over centuries, describes these systems with excellent accuracy.

Key traits of large objects:

Precise measurability: We can accurately find their position, velocity, acceleration, path, kinetic energy, and total energy.

Continuous energy states: Their energy can take any value within a range and changes smoothly.

Predictable motion: Newton’s laws tell us exactly how forces affect their movement.

Deterministic behavior: Given initial conditions, we can predict future states with amazing accuracy.

But when we look at tiny particles—electrons, protons, atoms—classical physics stops working. Reality at these scales follows totally different rules:

Quantum uncertainty: We can’t know a particle’s exact position and momentum at the same time, so the idea of a sharply defined path breaks down.

Wave-particle duality: Quantum objects show wave-like or particle-like behavior, depending on how we measure them.

Probabilistic behavior: We can only calculate the chance of finding a particle in a state, not its exact condition.

Describing this quantum behavior requires new concepts: stationary states, energy levels, and quantum transitions.

Bound particles, such as electrons in an atom, exist in stationary states: configurations with a fixed, well-defined energy. These allowed energy values, called energy levels, have key traits:

Discrete: Only certain energy values are allowed, with nothing in between.

Independent: Each energy level is distinct, separated from its neighbors by a finite energy gap.

Quantized transitions: Energy changes in specific steps, not smoothly.

The lowest energy state is the ground state; higher ones are excited states. Unlike macroscopic objects, which can change energy gradually, a tiny particle moves between levels in a discrete “jump” called a quantum transition, absorbing or releasing the energy difference between the two levels.

Quantum mechanics has a fascinating quirk: energy level degeneracy. This happens when multiple different quantum states have the same energy.

The degeneracy number (g) is how many different states share an energy level. For example, if an energy level E has four states with identical energy, g = 4.
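As a rough illustration, degeneracy can be counted by grouping states by their energy. The state labels and energies in this Python sketch are made up for the example (integers in arbitrary units), not data for any real system.

```python
from collections import Counter

# Hypothetical quantum states as (label, energy) pairs.
# Energies are integers in arbitrary units so equal values compare exactly.
states = [
    ("a", 1),
    ("b", 2), ("c", 2), ("d", 2), ("e", 2),
    ("f", 3), ("g", 3),
]


def degeneracies(state_list):
    """Map each energy to the number of states that share it (its degeneracy g)."""
    return dict(Counter(energy for _label, energy in state_list))


g_by_energy = degeneracies(states)
print(g_by_energy)  # {1: 1, 2: 4, 3: 2}
```

Here the level with energy 2 has four states, so g = 4, matching the example in the text.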

Quantum mechanics describes single particles, but real systems contain enormous numbers of them, on the order of 10²³ in everyday amounts of matter. You can’t track every particle, so you need statistics to understand their collective behavior.

That’s where statistical mechanics comes in. We study average behavior of large particle groups and calculate the chance of finding particles in specific energy states.

The Boltzmann distribution, introduced by Ludwig Boltzmann in 1868, describes how particles spread across energy states once a system reaches thermal equilibrium. Here’s the formula:

P(E) ∝ exp(-E/kT)

Where:

P(E) = chance of finding a particle in a state with energy E

k = Boltzmann constant (1.38 × 10⁻²³ J/K)

T = absolute temperature (Kelvin)

Several consequences follow from this formula:

Exponential decay: The population of a state falls off exponentially as its energy rises.

Temperature dependence: Higher temperatures spread particles more evenly across the energy levels.

Ground state dominance: At low temperatures, most particles stay in the lowest energy state.

Dynamic equilibrium: Particles switch states continuously, but the overall statistics stay constant.
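To see these traits in numbers, here is a minimal Python sketch that turns P(E) ∝ exp(-E/kT) into normalized populations. The three-level system, its 0.1 eV spacing, and the function name are assumptions chosen for illustration. The Boltzmann constant is written in eV/K rather than J/K, and each level is weighted by its degeneracy g.

```python
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K (equivalent to 1.38e-23 J/K)


def boltzmann_populations(energies_ev, degeneracies, temperature_k):
    """Return the fraction of particles in each level at thermal equilibrium.

    Each level gets the weight g * exp(-E / kT); dividing by the sum of all
    weights turns the proportionality into probabilities that add up to 1.
    """
    if temperature_k <= 0:
        raise ValueError("temperature must be positive")
    kt = K_B_EV * temperature_k
    # Shift by the lowest energy so every exponent is <= 0 (avoids overflow).
    e_min = min(energies_ev)
    weights = [
        g * math.exp(-(e - e_min) / kt)
        for e, g in zip(energies_ev, degeneracies)
    ]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical system: three non-degenerate levels spaced 0.1 eV apart.
levels_ev = [0.0, 0.1, 0.2]
g_values = [1, 1, 1]

cold = boltzmann_populations(levels_ev, g_values, 100.0)   # low temperature
hot = boltzmann_populations(levels_ev, g_values, 5000.0)   # high temperature

print("100 K: ", [round(p, 4) for p in cold])
print("5000 K:", [round(p, 4) for p in hot])
```

At 100 K nearly every particle sits in the ground state, while at 5,000 K the three populations are much closer to each other but still decrease with energy.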

These constant jumps between energy levels mean that particles continuously absorb and emit photons. The result is thermal radiation: electromagnetic waves that matter emits because of its temperature.
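The photon emitted or absorbed in a single transition carries the energy difference between the two levels, so its wavelength follows from λ = hc/ΔE. A minimal Python sketch, assuming a hypothetical 2 eV gap chosen purely for illustration:

```python
H = 6.62607015e-34     # Planck constant, J*s
C = 2.99792458e8       # speed of light, m/s
EV = 1.602176634e-19   # joules per electronvolt


def photon_wavelength_nm(delta_e_ev):
    """Wavelength (nm) of a photon whose energy equals the level gap delta_e_ev (eV)."""
    if delta_e_ev <= 0:
        raise ValueError("energy gap must be positive")
    return H * C / (delta_e_ev * EV) * 1e9


gap_ev = 2.0  # hypothetical energy gap between two levels
print(f"{gap_ev} eV gap -> {photon_wavelength_nm(gap_ev):.1f} nm")
```

A 2 eV gap corresponds to roughly 620 nm, which lies in the visible range. Smaller gaps give longer, infrared wavelengths.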

All matter above absolute zero gives off thermal radiation. Its traits depend on the object’s temperature, following Planck’s law, Wien’s displacement law, and the Stefan-Boltzmann law.

High-temperature sources:

The Sun (surface temperature ~5,800 K): Emits most strongly in visible light, along with ultraviolet and infrared radiation.

Flames and incandescent bulbs: Give off visible light because they’re hot.

Hot metals: Glow red, orange, or white, depending on their temperature.

Low-temperature sources:

The human body (~310 K) emits mostly infrared radiation.

Room-temp objects: Give off infrared radiation we can’t see.

Electronic devices: Give off heat as infrared radiation that thermal cameras can image.

Blackbody radiation is the idealized model of thermal radiation: a blackbody absorbs all incoming radiation and emits a spectrum that depends only on its temperature. Max Planck’s 1900 explanation of this spectrum, which assumed that energy is exchanged in discrete quanta, was one of the founding discoveries of quantum mechanics.
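To make the temperature dependence concrete, the sketch below applies Wien’s displacement law (λ_max = b/T) and the Stefan-Boltzmann law (j = σT⁴) to the two temperatures quoted above. It treats both the Sun and the human body as ideal blackbodies, which is only an approximation, and the function names are illustrative.

```python
WIEN_B = 2.897771955e-3   # Wien displacement constant, m*K
SIGMA = 5.670374419e-8    # Stefan-Boltzmann constant, W m^-2 K^-4


def peak_wavelength_m(temperature_k):
    """Wavelength (m) at which an ideal blackbody emits most strongly."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive")
    return WIEN_B / temperature_k


def radiant_exitance(temperature_k):
    """Power emitted per unit surface area (W/m^2) by an ideal blackbody."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive")
    return SIGMA * temperature_k ** 4


# Temperatures taken from the examples in the text.
for name, temp_k in [("Sun", 5800.0), ("Human body", 310.0)]:
    peak_um = peak_wavelength_m(temp_k) * 1e6
    print(f"{name}: peak at {peak_um:.2f} um, "
          f"{radiant_exitance(temp_k):.3g} W/m^2")
```

Under these assumptions the Sun peaks near 0.5 µm, in the visible range, while the human body peaks near 9.3 µm, in the infrared, consistent with the two lists above.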

Understanding energy levels and the Boltzmann distribution is key for many fields:

Spectroscopy and Analysis:

Study atomic and molecular spectra.

Determine stellar composition and temperature.

Build laser tech.

Make efficient LEDs.

Thermal Management:

Design efficient heat exchangers.

Build thermal imaging and night-vision systems.

Collect and convert solar energy.

Control spacecraft heat.

Quantum Technologies:

Quantum computing with controlled energy states.

Quantum communication.

Precision measurements.

Advanced sensors.

Moving from classical to quantum mechanics is one of science’s deepest paradigm shifts. Macroscopic objects follow predictable Newtonian rules, but tiny particles live in a quantum world of discrete energy levels, probability distributions, and statistical behavior.

The Boltzmann distribution links quantum mechanics and thermodynamics: it explains how huge numbers of particles together produce the thermal phenomena we see every day. From a candle’s warm glow to a star’s blazing radiation, these principles govern energy transfer and the behavior of matter across the universe.

As we build quantum technologies and learn more about matter at the smallest scales, these ideas remain central to both theory and real-world use. The interplay between quantum energy levels and statistical distributions will keep driving innovations in energy, computing, and materials science for years to come.
